Power plants are challenged to generate value from their data, but this can be a tedious and slow process with uncertain outcomes. Now, as shown in these use cases, data analytics solutions can put innovation in the hands of process engineers and experts for rapid and useful insights.

Most power plants have tremendous amounts of data stored in their historians, asset management systems, and/or control and monitoring systems. Plant operations and maintenance can be greatly improved by turning this data into actionable information, but this has proven to be easier said than done for many plant operators, due to a variety of issues.

Because of long operating lifetimes, power generation plants can lag in the adoption of modern data analytics and other solutions to improve operations and maintenance. Many facilities still have what they started with in terms of automation hardware and software systems, based on the refresh cycle of their main control system.

A good example is the data analytics software plants use to improve operations and maintenance through root cause analysis, asset optimization, report generation, and more. The default data analytics approach in most plants today is the spreadsheet, the go-to tool for process engineers in every industry, and now more than 30 years old. While it provides unquestionable flexibility and power, this general-purpose tool lags in the innovations that have been introduced in information technology (IT) departments, and even in consumers’ lives.

In particular, one often sees frustration with older software solutions among younger employees who show up with a lifetime of computer experience, and wonder at the lack of innovation in the tools provided to them for data analytics. Spreadsheets and macros in a world of Google and Alexa just don’t make sense to this audience.

To compound the issue, there is more data to work with (Figure 1), more demand to get insights from data, and more technology—creating a gap between the status quo spreadsheet and the potential for improvement.

1. DRIP. Power plant personnel often find themselves drowning in data, but lacking in the information required to improve operations and maintenance. Courtesy: Seeq Corp.

The data collection capabilities provided by process instrumentation, coupled with improved methods of networking and storage, have created an environment where companies accumulate vast amounts of time-series data from plant operations, labs, suppliers, and other sources. Together, these data source

Deriving Value from Big Data

Realizing that all this data is available—and being bombarded with stories about how other industries are deriving value from big data—C-level executives and plant managers are demanding and expecting more insights, faster, to drive improvements in plant operations and maintenance. These improvements could be increased asset availability via predictive analytics, improving compliance through monitoring of key metrics, or simply greater productivity when accessing contextualized data as input into plans, models, and budgets.

This is the managerial burden of the “big data” era, where awareness of innovation has created pressure to take advantage of it. Unfortunately, full utilization of many of the big data solutions available today requires extensive programming expertise and knowledge of data science, to say nothing of IT and other department costs to implement and manage—resulting in high failure rates during implementation.

In addition, there are the waves of technology innovation that have dominated headlines in recent years including big data, industrial internet of things, open source, cognitive computing, and the cloud. As referenced earlier, we live in a consumer and IT world dominated by advanced user experiences. The difference between what we do every day when using Google, Amazon, and other modern experiences—and the spreadsheet-based analytics solutions available to engineers in power generation facilities—is painfully obvious.

Further, these consumer and IT experiences have closed the required experience gap in terms of usability, in addition to providing powerful functionality. Users don’t have to be a programmer to use Google or a data scientist to use Amazon Alexa, because these technology solutions are wrapped in easy-to-use experiences.

Beyond Spreadsheets to Modern Data Analytics

Examining these trends, it becomes obvious that it’s time for a new era in data analytics solutions for process manufacturing in general, and power plants in particular. The spreadsheet may live forever for some types of analysis, but there need to be better solutions for data analytics that go beyond the limited expectations defined by legacy software technology, with employees working alone to produce paper reports.

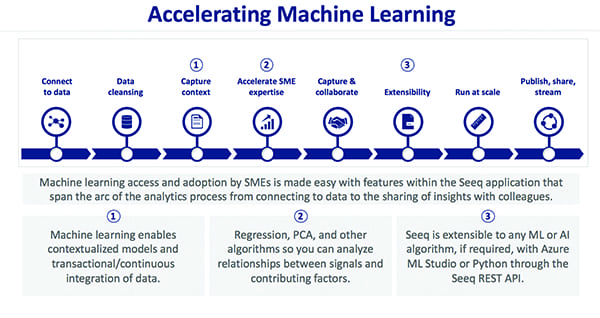

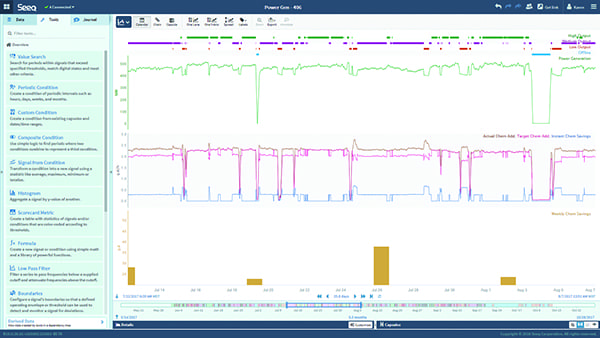

There are new technologies and solutions that accelerate machine learning to enable faster insights for improved process and business outcomes (Figure 2). These solutions bridge the gap among innovations, organizational needs, and access to insights by leveraging technologies like machine learning and web-based deployment, while remaining accessible to process engineers and experts. Figure 3 is an example of how big data can be visually depicted by data analytics software such that it is easily understood by engineers.

s contain potential insights into the operation and maintenance of virtually every major item of equipment and every important process in a typical power plant.

This article will use multiple examples to show how power plants are using data analytics to improve operations and maintenance, thus providing a road map for facilities looking to implement similar programs. But first, let’s look at some of the pressure points forcing power plants to start creating value from their data.

https://www.powermag.com/using-data-analytics-to-improve-operations-and-maintenance/